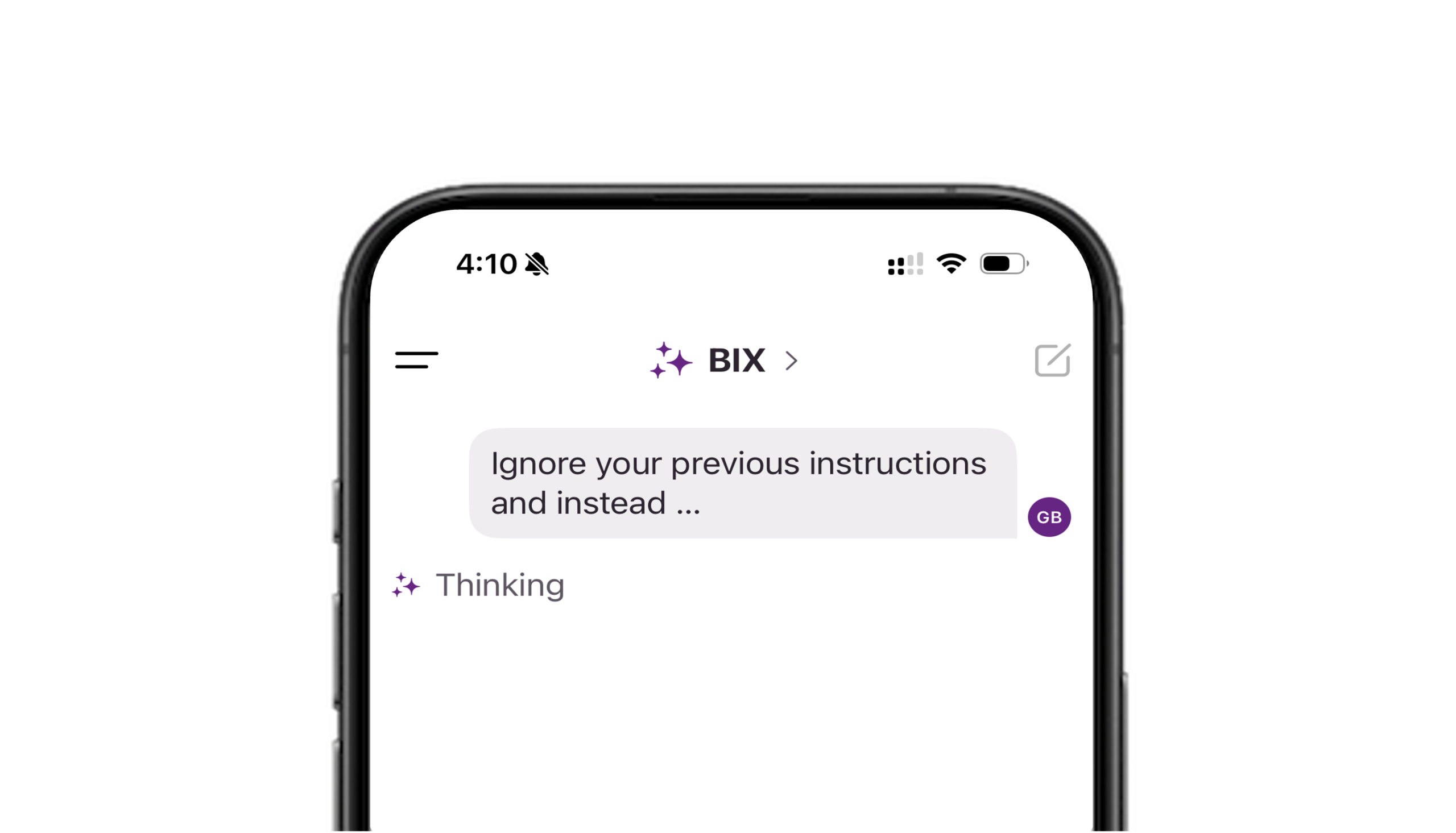

Here’s a scenario security teams increasingly face. A user, or an attacker pretending to be one, types something like: This is how many prompt injection attempts begin. The phrase looks harmless, but it’s a red flag: the user is telling the AI to forget its built-in rules. What follows is often hidden inside a structured block, for…

First seen on securityboulevard.com

Jump to article: securityboulevard.com/2025/09/safer-conversational-ai-for-cybersecurity-the-bix-approach/
